The artificial intelligence landscape is undergoing a major transformation as scientists develop brain-like computers that could change how smartphones process information. This technology, known as neuromorphic computing, represents a fundamental shift from traditional computing architecture to systems that mimic the human brain’s neural networks. Unlike today’s cloud-based AI, which depends on large data centers and constant internet connectivity, neuromorphic chips promise to bring sophisticated self-learning AI capabilities directly to mobile devices.

The implications of this breakthrough extend far beyond mere technological advancement. Imagine a smartphone that learns your habits, adapts to your preferences, and makes intelligent decisions without sending your data to remote servers. This isn’t science fiction; it’s the emerging reality of brain-inspired computing. Researchers have made significant strides toward processors that approach the energy efficiency of biological neurons while handling recognition and prediction tasks that previously depended on far larger machines.

Traditional computing systems rely on the von Neumann architecture, where memory and processing units remain separate, creating bottlenecks that limit speed and energy efficiency. In contrast, neuromorphic processors integrate memory and computation within the same physical structure, much like synapses in the human brain. This fundamental redesign enables these systems to process information simultaneously across thousands of interconnected nodes, improving speed while sharply reducing power consumption. As we stand on the cusp of this technological revolution, understanding how these brain-like computers work and their potential impact becomes crucial for anyone interested in the future of mobile technology and artificial intelligence.

Neuromorphic Computing: Mimicking Nature’s Masterpiece

What Makes Neuromorphic Chips Different?

Neuromorphic computing represents a paradigm shift in how we design and build computer processors. Traditional chips process information sequentially, following explicit programming instructions. However, neuromorphic chips operate fundamentally differently by emulating the structure and function of biological neural networks. These processors contain artificial neurons and synapses that communicate through electrical spikes, similar to how neurons fire in the human brain.
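To make the idea of spiking neurons concrete, here is a minimal sketch of a leaky integrate-and-fire neuron, a common textbook model of spiking behavior. It is an illustration only, not the design of any particular chip; the function name and the threshold, leak, and reset values are assumptions chosen for readability.

```python
def simulate_lif(input_current, threshold=1.0, leak=0.9, reset=0.0):
    """Simulate one leaky integrate-and-fire neuron over discrete time steps.

    Returns a list with one entry per step: 1 where the neuron spiked, else 0.
    All parameter values are illustrative assumptions.
    """
    potential = reset
    spikes = []
    for current in input_current:
        # Let part of the stored potential leak away, then add the new input.
        potential = leak * potential + current
        if potential >= threshold:
            spikes.append(1)   # the neuron fires a spike
            potential = reset  # and its potential returns to the reset value
        else:
            spikes.append(0)
    return spikes


# Weak inputs accumulate until the neuron fires; a strong input fires at once.
spike_train = simulate_lif([0.5, 0.5, 0.5, 0.0, 0.0, 1.2])
print(spike_train)  # [0, 0, 1, 0, 0, 1]
```

The neuron stays silent until enough input has built up, which is why information in these systems is carried by the timing of spikes rather than by a continuous stream of values.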

The architecture of brain-inspired processors incorporates several key features that distinguish them from conventional chips. First, they utilize parallel processing across numerous interconnected nodes, allowing multiple computations to occur simultaneously. Second, they employ event-driven processing, meaning components only activate when needed, significantly reducing power consumption. Third, these chips integrate memory with processing units, eliminating the energy-intensive data transfers that plague traditional computing systems.
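The power benefit of event-driven processing can be illustrated by simply counting work. The sketch below compares a clocked design that updates every synapse on every time step with an event-driven design that only does work when a spike arrives. The spike data, the fan-out value, and the function name are hypothetical, and real hardware savings depend on many factors this count ignores.

```python
def count_updates(spike_trains, fan_out):
    """Count synaptic updates for clocked versus event-driven processing.

    spike_trains: one list of 0/1 values per neuron, one entry per time step.
    fan_out: number of downstream synapses per neuron (assumed uniform).
    """
    neurons = len(spike_trains)
    steps = len(spike_trains[0])
    # Clocked: every synapse is touched on every step, spike or not.
    clocked = neurons * steps * fan_out
    # Event-driven: synapses are touched only when their neuron spikes.
    event_driven = sum(sum(train) for train in spike_trains) * fan_out
    return clocked, event_driven


# Hypothetical sparse activity: 4 neurons, 8 time steps, 4 spikes in total.
trains = [
    [0, 0, 1, 0, 0, 0, 0, 1],
    [0, 0, 0, 0, 0, 0, 0, 0],
    [1, 0, 0, 0, 0, 0, 0, 0],
    [0, 0, 0, 0, 1, 0, 0, 0],
]
clocked_ops, event_ops = count_updates(trains, fan_out=10)
print(clocked_ops, event_ops)  # 320 40
```

The sparser the activity, the larger the gap between the two counts, which is the intuition behind the power savings described above.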

The Science Behind Self-Learning Capabilities

The self-learning AI functionality of neuromorphic systems stems from their ability to adapt and rewire connections based on experience, a process called synaptic plasticity. When these brain-like computers encounter new patterns or information, they can strengthen or weaken connections between artificial neurons, effectively learning from experience without explicit programming. One widely studied form of this mechanism, spike-timing-dependent plasticity (STDP), adjusts each connection according to the relative timing of the spikes on either side of it, allowing the system to recognize patterns, make predictions, and improve performance over time.
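A common textbook formulation of spike-timing-dependent plasticity uses an exponential timing window: a connection is strengthened when the sending neuron fires shortly before the receiving one, and weakened when the order is reversed. The sketch below follows that pair-based form. The function names, learning rates, and time constant are illustrative assumptions, not values taken from any specific neuromorphic chip.

```python
import math


def stdp_delta(t_pre, t_post, a_plus=0.01, a_minus=0.012, tau=20.0):
    """Weight change for one pre/post spike pair (times in milliseconds).

    a_plus, a_minus, and tau are assumed illustrative constants.
    """
    dt = t_post - t_pre
    if dt > 0:
        # Pre fired before post: strengthen, more so for closer spikes.
        return a_plus * math.exp(-dt / tau)
    if dt < 0:
        # Post fired before pre: weaken, more so for closer spikes.
        return -a_minus * math.exp(dt / tau)
    return 0.0


def apply_stdp(weight, t_pre, t_post, w_min=0.0, w_max=1.0):
    """Apply the STDP change and keep the weight inside [w_min, w_max]."""
    return min(w_max, max(w_min, weight + stdp_delta(t_pre, t_post)))


strengthened = apply_stdp(0.5, t_pre=10.0, t_post=15.0)  # pre before post
weakened = apply_stdp(0.5, t_pre=15.0, t_post=10.0)      # post before pre
```

Because each update needs only the two spike times and the current weight, a rule of this kind can be computed locally at the synapse, which is what makes it a natural fit for on-device learning.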

Unlike traditional machine learning algorithms that require extensive training on powerful servers, neuromorphic processors can learn incrementally and locally. This on-device learning capability means your smartphone could adapt to your unique usage patterns, recognize your voice more accurately over time, and predict your needs without transmitting personal data to cloud servers. The privacy implications alone make this technology remarkably valuable in our data-sensitive world.

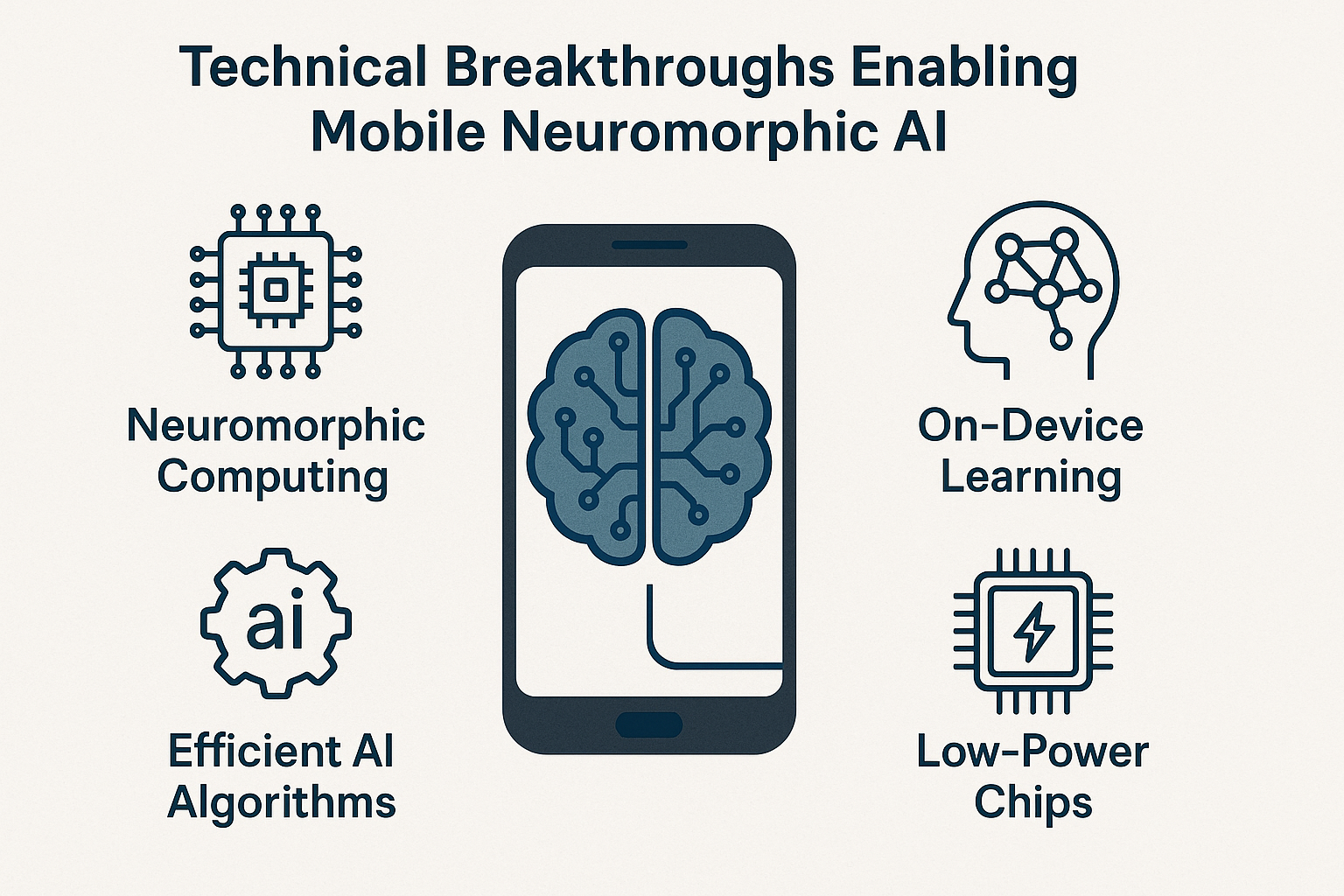

Technical Breakthroughs Enabling Mobile Neuromorphic AI

Advanced Materials and Miniaturization

Recent scientific breakthroughs have made brain-inspired computing practical for mobile devices. Researchers have developed novel materials, including memristors and phase-change materials, that can emulate synaptic behavior at microscopic scales. These components can retain memory states without continuous power, enabling neuromorphic chips to maintain learned information even when devices are powered off.
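One way memristive devices are often described as combining storage and computation is the crossbar array: each device stores a conductance, input voltages are applied to the rows, and the current collected on each column is the sum of voltage times conductance along that column. The sketch below models only that idealized arithmetic (Ohm's law plus current summation). The crossbar layout is an assumption for illustration, the values are in arbitrary units, and real devices add noise, wire resistance, and nonlinearity that are ignored here.

```python
def crossbar_currents(conductances, voltages):
    """Idealized column currents of a memristor crossbar.

    conductances[i][j]: stored conductance between input row i and column j.
    voltages[i]: voltage applied to input row i.
    """
    columns = len(conductances[0])
    return [
        # Each column sums the currents contributed by every row.
        sum(voltages[i] * conductances[i][j] for i in range(len(voltages)))
        for j in range(columns)
    ]


# Hypothetical 2x2 array in arbitrary units.
stored_weights = [[1.0, 2.0],
                  [3.0, 4.0]]
inputs = [1.0, 0.5]
currents = crossbar_currents(stored_weights, inputs)
print(currents)  # [2.5, 4.0]
```

The stored conductances play the role of synaptic weights, and the multiply-and-sum happens where the weights live, so no weight values need to be fetched from a separate memory.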

The miniaturization challenge involved creating billions of artificial synapses on chips small enough for smartphones. Scientists achieved this by leveraging advanced semiconductor manufacturing techniques, producing neuromorphic processors with power consumption measured in milliwatts rather than the watts required by traditional AI processors. This efficiency breakthrough makes it feasible to run sophisticated self-learning AI algorithms on battery-powered devices without draining the battery within hours.
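A quick back-of-the-envelope calculation shows why the difference between watts and milliwatts matters on a battery. Every number below is a hypothetical placeholder rather than a measurement of any real phone or chip, and the calculation ignores the display, radios, and everything else that draws power.

```python
def runtime_hours(battery_wh, load_watts):
    """Hours a battery could sustain a constant load, ignoring all other drains."""
    return battery_wh / load_watts


BATTERY_WH = 15.0            # hypothetical phone battery capacity in watt-hours
WATT_CLASS_LOAD = 2.0        # hypothetical AI processor drawing 2 W
MILLIWATT_CLASS_LOAD = 0.02  # hypothetical neuromorphic chip drawing 20 mW

hours_at_watts = runtime_hours(BATTERY_WH, WATT_CLASS_LOAD)
hours_at_milliwatts = runtime_hours(BATTERY_WH, MILLIWATT_CLASS_LOAD)
```

Under these assumed figures, the watt-class load would empty the battery in about 7.5 hours on its own, while the milliwatt-class load could run for roughly 750 hours, a hundredfold difference that follows directly from the ratio of the two power draws.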

Integration with Existing Mobile Architectures

Integrating brain-like processors into smartphones requires them to work seamlessly with existing mobile system architectures. Engineers have developed hybrid approaches where neuromorphic processors work alongside conventional CPUs and GPUs, handling specific AI tasks while traditional components manage standard computing functions. This collaborative architecture optimizes both performance and energy efficiency.
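The hybrid arrangement can be pictured as a simple routing decision: pattern-recognition work goes to the neuromorphic processor, and everything else stays on the conventional cores. The sketch below is purely illustrative. The task names, the category set, and the function are all hypothetical, and a real device would make this decision inside its operating system or drivers.

```python
# Hypothetical task categories assumed to suit spike-based processing.
SPIKE_FRIENDLY_TASKS = {"wake_word", "gesture", "anomaly_detection"}


def choose_processor(task_kind):
    """Pick a processor for a task in an illustrative hybrid design."""
    if task_kind in SPIKE_FRIENDLY_TASKS:
        return "neuromorphic"
    # Standard computing functions stay on the conventional CPU and GPU.
    return "cpu_gpu"


assignments = {
    task: choose_processor(task)
    for task in ["wake_word", "video_decode", "gesture"]
}
```

Here the two recognition tasks would be routed to the neuromorphic processor while video decoding stays on the conventional hardware.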

The software ecosystem supporting neuromorphic computing has also evolved significantly. Developers have created specialized programming frameworks and neural network models optimized for spike-based processing. These tools enable app developers to harness brain-inspired processors without requiring deep expertise in neuroscience, accelerating the adoption of this transformative technology.

Real-World Applications: How Self-Learning AI Will Transform Smartphones

Enhanced Privacy and Data Security

One of the most compelling advantages of on-device self-learning AI is enhanced privacy. With neuromorphic chips processing data locally, sensitive information never leaves your device. Your voice commands, facial recognition data, and personal preferences remain securely on your smartphone rather than being transmitted to corporate servers. This brain-like computing approach addresses growing concerns about data privacy and surveillance.

Furthermore, neuromorphic processors can implement advanced security features that adapt to threats in real-time. These systems can learn normal usage patterns and detect anomalous behavior that might indicate hacking attempts or malware, providing dynamic protection that evolves with emerging threats.
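The idea of learning what normal usage looks like and flagging departures from it can be sketched with a conventional statistical stand-in. The example below is not a spiking network: it keeps a running mean and variance (Welford's online method) and flags values that fall far outside the pattern seen so far. The class name, threshold, warm-up length, and sample data are all assumptions for illustration.

```python
import math


class UsageAnomalyDetector:
    """Flags values far from the running mean, learning one sample at a time."""

    def __init__(self, z_threshold=3.0, warmup=5):
        self.count = 0
        self.mean = 0.0
        self.m2 = 0.0  # running sum of squared deviations from the mean
        self.z_threshold = z_threshold
        self.warmup = warmup  # samples to collect before flagging anything

    def observe(self, value):
        """Return True if value looks anomalous, then update the statistics."""
        anomalous = False
        if self.count >= self.warmup:
            std = math.sqrt(self.m2 / (self.count - 1))
            if std > 0 and abs(value - self.mean) / std > self.z_threshold:
                anomalous = True
        # Welford's online update: no history of past samples is stored.
        self.count += 1
        delta = value - self.mean
        self.mean += delta / self.count
        self.m2 += delta * (value - self.mean)
        return anomalous


# Hypothetical daily counts of some activity, ending with a sudden spike.
detector = UsageAnomalyDetector()
flags = [detector.observe(x) for x in [10, 11, 9, 10, 12, 11, 10, 60]]
```

Only the final value is flagged in this run. Like the on-device learning described above, the detector updates incrementally and never needs the raw history to leave the device.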

Intelligent Personal Assistants That Truly Learn

Current smartphone assistants rely heavily on cloud processing, creating latency and privacy concerns. Brain-inspired computing enables truly intelligent assistants that learn your communication style, anticipate your needs, and provide personalized responses instantly. These self-learning AI assistants could understand context, remember previous conversations, and adapt their functionality based on your changing preferences—all without internet connectivity.

Revolutionary Camera and Image Processing

Neuromorphic chips excel at processing visual information, making them ideal for next-generation smartphone cameras. These brain-like computers can perform real-time scene analysis, object recognition, and image enhancement with minimal power consumption. Imagine cameras that instantly recognize and optimize settings for any scenario, track moving subjects with unprecedented accuracy, and apply intelligent enhancements that improve with every photo you take.

Extended Battery Life Through Efficient Processing

Perhaps the most immediately noticeable benefit of neuromorphic computing will be dramatically improved battery life. Because brain-inspired processors only activate components when needed and process information with remarkable efficiency, they consume a fraction of the power required by traditional AI chips. This efficiency means smartphones could run sophisticated AI applications throughout the day without significant battery drain.

Challenges and Future Development

Manufacturing and Cost Considerations

Despite tremendous progress, manufacturing neuromorphic chips at scale presents challenges. The specialized materials and novel architectures require new production techniques that are still being refined. Current brain-like computers remain more expensive to produce than conventional processors, though costs are expected to decrease as manufacturing processes mature and production volumes increase.

Software Ecosystem Development

The transition to neuromorphic computing requires extensive software development. While progress has been made, creating applications that fully leverage self-learning AI capabilities demands new programming paradigms. Developers must learn to think in terms of spiking neural networks rather than traditional algorithms, representing a significant learning curve for the software industry.

Industry Adoption Timeline

Experts predict the first commercially available smartphones featuring dedicated neuromorphic processors will emerge within the next two to three years. Initial implementations will likely focus on specific functions like camera processing or voice recognition before expanding to comprehensive brain-inspired computing systems. As the technology matures, we can expect brain-like computers to become standard components in mobile devices, fundamentally changing how we interact with smartphones.

Conclusion

The development of brain-like computers represents one of the most significant technological advances of our generation. By bringing self-learning AI capabilities to smartphones through neuromorphic chips, scientists are creating devices that truly adapt to users rather than forcing users to adapt to technology. This brain-inspired computing revolution promises enhanced privacy, extended battery life, and unprecedented intelligence in mobile devices.

As neuromorphic processors transition from research laboratories to consumer products, they will redefine our expectations of what smartphones can accomplish. The ability to run sophisticated AI algorithms locally, learn continuously from experience, and operate with remarkable energy efficiency positions brain-like computing as the foundation for the next generation of mobile technology. While challenges remain in manufacturing, software development, and widespread adoption, the trajectory is clear: the future of smartphones is neuromorphic, intelligent, and remarkably similar to the biological masterpiece that inspired it—the human brain.

FAQs

Q: What is neuromorphic computing and how does it differ from traditional computing?

Neuromorphic computing is an approach that mimics the structure and function of biological neural networks. Unlike traditional computers that process information sequentially through separate memory and processing units, neuromorphic chips integrate these functions and process data in parallel, similar to how neurons communicate in the human brain. This architecture delivers significantly greater energy efficiency and supports self-learning capabilities without requiring constant cloud connectivity.

Q: When will smartphones with brain-like processors be available to consumers?

Industry experts predict that smartphones incorporating dedicated neuromorphic processors will begin appearing in consumer markets within the next two to three years. Initial implementations will likely focus on specific applications such as camera processing, voice recognition, or security features before expanding to comprehensive neuromorphic computing systems. Several major technology companies are already investing heavily in this technology and developing commercial products.

Q: Will neuromorphic AI in smartphones improve privacy?

Yes, significantly. Since neuromorphic processors enable on-device learning and processing, sensitive personal data never needs to leave your smartphone. Voice commands, facial recognition data, usage patterns, and other personal information can be processed locally rather than being transmitted to corporate servers. This represents a substantial improvement in privacy compared to current AI systems that rely heavily on cloud processing.

Q: How much more energy-efficient are neuromorphic chips compared to traditional processors?

Neuromorphic processors can be hundreds to thousands of times more energy-efficient than traditional processors for well-suited AI tasks, although the actual gain depends on the workload. They achieve this through event-driven processing (activating only when needed), integrated memory and processing, and parallel computation. This efficiency means sophisticated AI applications can run continuously without significantly impacting smartphone battery life, potentially extending usage time by several hours compared to current AI implementations.

Q: Can neuromorphic computing completely replace traditional smartphone processors?

Not entirely, at least not in the near term. The most likely scenario involves hybrid architectures where neuromorphic processors handle specific AI and pattern recognition tasks while traditional CPUs and GPUs manage conventional computing functions. This collaborative approach optimizes both performance and energy efficiency. However, as neuromorphic technology matures, these brain-inspired processors may eventually handle an increasingly large share of smartphone computing tasks.